Amazon Managed Workflows for Apache Airflow (MWAA) is a managed AWS service that makes it simpler to set up and operate end-to-end data pipelines in the cloud at scale. Apache Airflow is an open-source tool for programmatically authoring, scheduling, and monitoring “workflows,” which are collections of processes and tasks. With Amazon MWAA, you can build workflows in Python using Airflow without having to manage the scalability, availability, or security of the underlying infrastructure. Amazon MWAA integrates with AWS security services to give you fast, secure access to your data, and it automatically scales workflow execution capacity to meet your demands.

In this blog, we will walk through how to set up an Amazon MWAA environment.

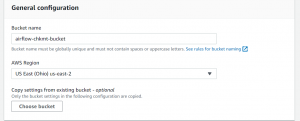

Step 1: Create an S3 bucket

- We require an Amazon S3 bucket to store Apache Airflow Directed Acyclic Graphs (DAGs), custom plugins, and Python dependencies.

- Make sure you create the bucket in the same AWS Region where you will set up MWAA.

- Go to the S3 console and click Create bucket.

- Choose a globally unique name for your bucket with the ‘airflow-’ prefix.

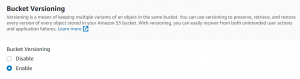

- Block all public access and enable versioning. MWAA requires both settings on the bucket.

- Click Create bucket.

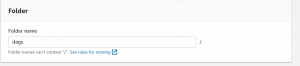

- Now open your bucket and create a folder named dags to store your DAG files.

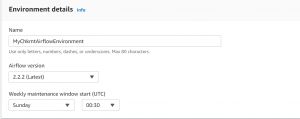
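If you prefer to script this step instead of clicking through the console, the bucket setup above can be sketched in Python. This is a minimal sketch, assuming a boto3 S3 client is passed in (for example `boto3.client("s3", region_name=region)`); the helper name `create_dag_bucket` is hypothetical, and the ‘airflow-’ check simply enforces the naming convention used in this walkthrough.

```python
from typing import Any


def create_dag_bucket(s3_client: Any, bucket_name: str, region: str) -> None:
    """Hypothetical helper: create and configure the S3 bucket MWAA reads DAGs from.

    `s3_client` is assumed to be a boto3 S3 client; `region` should be the
    same Region in which the MWAA environment will be created.
    """
    # Naming convention used in this walkthrough.
    if not bucket_name.startswith("airflow-"):
        raise ValueError("bucket name should start with 'airflow-'")

    # us-east-1 is the default location, so LocationConstraint must be omitted there.
    if region == "us-east-1":
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )

    # Block all public access.
    s3_client.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )

    # Enable versioning.
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )

    # S3 has no real folders: an empty object whose key ends in "/" shows up
    # as the dags folder in the console.
    s3_client.put_object(Bucket=bucket_name, Key="dags/")
```

Passing the client in, rather than creating it inside the function, keeps the sketch easy to test without AWS credentials.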

Step 2: Create Airflow environment

- Go to the Amazon MWAA console.

- Click Create environment.

- Enter a name and select the Airflow version.

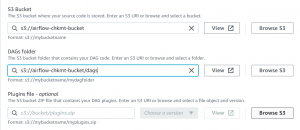

- Next, select the S3 bucket and the dags folder you created in Step 1.

- Click Next.

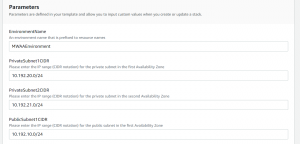

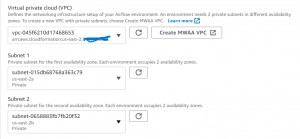

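- Under Networking, click Create MWAA VPC. You will be redirected to the AWS CloudFormation console; click Create stack. Once the stack has been created, return to the MWAA setup page.

- Select the VPC created by the stack.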

- Under Web server access, select your preferred access mode:

  - Private network limits access to the Apache Airflow UI to users within your VPC who have been granted the required IAM permissions.

  - Public network makes the Apache Airflow UI reachable over the internet; users still need IAM permissions to sign in.

- Under Security group(s), select Create new security group.

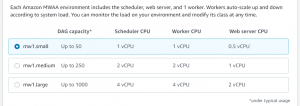

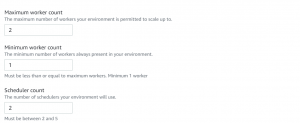

- Select the environment class, the minimum and maximum worker counts, and the number of schedulers.

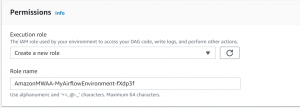
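The console choices made so far map fairly directly onto the parameters of the MWAA CreateEnvironment API. The sketch below is a minimal, hypothetical illustration of that mapping: `build_environment_request` and `wait_while_creating` are illustrative helper names, the default values are placeholders rather than recommendations, the ARN assumes the standard `aws` partition, and the MWAA client is assumed to be a boto3 client passed in by the caller. Unlike the console, the API does not create the execution role or security group for you, so their identifiers must already exist.

```python
import time
from typing import Any, Callable, Dict, List


def build_environment_request(
    name: str,
    airflow_version: str,
    bucket_name: str,
    execution_role_arn: str,
    subnet_ids: List[str],
    security_group_ids: List[str],
    private_web_server: bool = True,
    environment_class: str = "mw1.small",
    min_workers: int = 1,
    max_workers: int = 10,
    schedulers: int = 2,
) -> Dict[str, Any]:
    """Hypothetical helper: keyword arguments for a boto3 MWAA create_environment() call.

    Default values are illustrative placeholders, not recommendations.
    """
    # MWAA runs in two private subnets, each in a different Availability Zone.
    if len(subnet_ids) != 2:
        raise ValueError("MWAA expects exactly two private subnet IDs")
    if not 1 <= min_workers <= max_workers:
        raise ValueError("worker counts must satisfy 1 <= min_workers <= max_workers")
    return {
        "Name": name,
        "AirflowVersion": airflow_version,
        # Assumes the standard "aws" partition.
        "SourceBucketArn": f"arn:aws:s3:::{bucket_name}",
        "DagS3Path": "dags",  # the dags folder created in Step 1
        "ExecutionRoleArn": execution_role_arn,
        "NetworkConfiguration": {
            "SubnetIds": list(subnet_ids),
            "SecurityGroupIds": list(security_group_ids),
        },
        # Private network -> PRIVATE_ONLY, public network -> PUBLIC_ONLY.
        "WebserverAccessMode": "PRIVATE_ONLY" if private_web_server else "PUBLIC_ONLY",
        "EnvironmentClass": environment_class,
        "MinWorkers": min_workers,
        "MaxWorkers": max_workers,
        "Schedulers": schedulers,
    }


def wait_while_creating(
    mwaa_client: Any,
    name: str,
    poll_seconds: float = 60.0,
    timeout_seconds: float = 45 * 60,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_environment() until the status leaves CREATING; return the final status."""
    waited = 0.0
    while True:
        status = mwaa_client.get_environment(Name=name)["Environment"]["Status"]
        if status != "CREATING":
            return status  # e.g. "AVAILABLE" or "CREATE_FAILED"
        if waited >= timeout_seconds:
            raise TimeoutError(f"{name!r} still CREATING after {waited:.0f} seconds")
        sleep(poll_seconds)
        waited += poll_seconds
```

With boto3 installed, the request could be sent with `mwaa.create_environment(**request)` where `mwaa = boto3.client("mwaa")`. Because the call returns while the environment is still being built, a script would then call `wait_while_creating(mwaa, name)` and check that the result is `"AVAILABLE"`.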

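- Under Permissions, select Create a new role so that MWAA creates an execution role for the environment.

- Click Next, review your settings, and click Create.

- Creating the Apache Airflow environment takes about 15 to 20 minutes.

- Once the environment status is Available, click the Open Airflow UI link to access the UI.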

- Click Sign in With AWS Management Console.

- Now upload your DAG file to the dags folder of your S3 bucket.

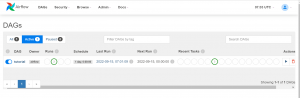
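The upload can also be scripted. This is a minimal sketch, assuming a boto3 S3 client is passed in; `upload_dag` is a hypothetical helper that places a local `.py` file directly under the dags folder using the client's `upload_file(Filename, Bucket, Key)` method.

```python
from pathlib import Path
from typing import Any


def upload_dag(s3_client: Any, bucket_name: str, dag_file: str, dags_prefix: str = "dags") -> str:
    """Hypothetical helper: upload a local DAG file into the bucket's dags folder.

    `s3_client` is assumed to be a boto3 S3 client. Returns the S3 key written.
    """
    path = Path(dag_file)
    # DAG files are Python modules.
    if path.suffix != ".py":
        raise ValueError(f"expected a Python DAG file, got {path.name!r}")
    # Keep only the file name so the DAG lands directly under the dags folder.
    key = f"{dags_prefix.strip('/')}/{path.name}"
    s3_client.upload_file(str(path), bucket_name, key)
    return key
```

For example, `upload_dag(boto3.client("s3"), "airflow-demo-bucket", "my_first_dag.py")` would write the object `dags/my_first_dag.py`; the bucket and file names here are placeholders.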

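- Wait a few moments, then refresh the Airflow UI; your DAG should appear in the DAGs list.

- Unpause the DAG if it is paused (Airflow pauses new DAGs by default), then click the Trigger DAG (play) button to run it.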
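A DAG can also be triggered without the UI through the MWAA CLI endpoint, which accepts Airflow CLI commands over HTTPS. The sketch below only builds the request and does not send it. It assumes the hostname and token come from the boto3 MWAA client's `create_cli_token(Name=...)` response (`WebServerHostname` and `CliToken`); `build_trigger_request` is a hypothetical helper, and the DAG ID check only approximates Airflow's own ID rules.

```python
import re
import urllib.request

# Approximates Airflow's DAG ID rule: letters, digits, underscores, dots, dashes.
_DAG_ID_PATTERN = re.compile(r"[\w.-]+")


def build_trigger_request(web_server_hostname: str, cli_token: str, dag_id: str) -> urllib.request.Request:
    """Hypothetical helper: POST that runs `airflow dags trigger <dag_id>` on MWAA.

    `web_server_hostname` and `cli_token` are assumed to come from the
    create_cli_token() response ("WebServerHostname", "CliToken").
    """
    # Reject IDs that could smuggle extra arguments into the CLI command.
    if not _DAG_ID_PATTERN.fullmatch(dag_id):
        raise ValueError(f"invalid DAG id: {dag_id!r}")
    return urllib.request.Request(
        url=f"https://{web_server_hostname}/aws_mwaa/cli",
        data=f"dags trigger {dag_id}".encode("utf-8"),
        headers={"Authorization": f"Bearer {cli_token}", "Content-Type": "text/plain"},
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen()`. The JSON response should contain base64-encoded `stdout` and `stderr` fields with the CLI output.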

Please contact our DevOps engineering team if you would like to discuss anything related to cloud infrastructure.